The Learnix Operating System

"If you can't explain it simply, you don't understand it well enough." - Albert Einstein

Hello there!1

In this book we are going to write and learn about operating systems together!

We are going to implement an entire POSIX compliant OS in Rust and not use ANY2 external libraries. All of the thought process, code and implementations will be explained and documented here as well as in this repo which all the code snippets are from.

Note: ALL the syntax highlighting of the Rust code is custom and created by me! If you see any bugs, please write in the comments or submit an issue.

Base Knowledge

This book will be technical, and will assume a little bit of a programming knowledge background, but not necessarily in Rust.

If you are not coming from a low level programming knowledge that's fine!

Just make sure you know this stuff or learn it as you read. Also, if in any place of this book I take some things for granted, please, open an issue and let me know so I can explain it better.

Some base knowledge that you will need to have:

-

Some assembly knowledge. (just understand simple

movsand arithmetic operations at a very basic level3) -

Some knowledge on memory. (what's a pointer, what's an address)

-

Knowledge in Rust is not that important, but knowing at least one programming language is. I myself have some more learning in Rust, and in this book I will also explain some great features that it has!

-

A lot of motivation to learn and understand because it is a complex subject.

Roadmap of this book

-

Compiling a standalone binary

-

Boot loading, Debugging, stages and some legacy stuff

-

Important CPU modes and instructions

-

Paging, writing our own malloc

-

Utilizing the Interrupt Descriptor Table

-

File systems and Disk Drivers

-

Thinking in terms of processes

-

Writing a shell

-

Running our first program! (Which of course will be Doom)

-

To be continued (Hopefully a virtualization section and loading a VM of another OS)

-

Definitely not a star wars reference ↩

-

Only libraries that remove boilerplate code will be used (And obviously be explained). ↩

-

This is only relevant to the starting stages and some optimizations, and probably a day of learning will be enough ↩

Roadmap

This document specifies the planned topics and features that will be developed in the LearnixOS and covered by this book.

Each topic has a corresponding issue both in the book and in the OS repository. The status of each topic can be tracked using the links provided in the tables below.

Note: This list is definitely not final, and more topics will be added as the development continues.

Bootloader

| Feature/Topic | Status in OS | Status in Book |

|---|---|---|

| Enable A20 | ✓Implement A20 Line Before PublicationDone | ✓Enable A20 Before PublicationDone |

| Read kernel from disk | ✓Read Kernel From Disk Before PublicationDone | ✓Read Kernel From Disk Before PublicationDone |

| Load and Initialize global descriptor table | ✓Load and initialize GDT Before PublicationDone | ✓Load and Initialize GDT Before PublicationDone |

| Enable Paging | ✓Enable Paging Before PublicationDone | ✓Enable Paging Before PublicationDone |

Memory Management

| Feature/Topic | Status in OS | Status in Book |

|---|---|---|

| Obtaining Memory map | ✓Obtain Memory Map Before PublicationDone | ⊙Obtain Memory Map Before Publication |

| Bitmap Page Allocator | ✓Bitmap Page Allocator Before PublicationDone | ⊙Bitmap Page Allocator Before Publication |

| Mapping Virtual Memory | ✓Mapping Virtual Memory Before PublicationDone | ⊙Mapping Virtual Memory Before Publication |

| Slab Allocator | ⊙Slab Allocator Before PublicationIn Progress | ⊙Slab Allocator Before Publication |

| Virtual Memory Allocator | ⊙Virtual Memory Allocator After Publication | ⊙Virtual Memory Allocator After Publication |

Interrupt Handling

| Feature/Topic | Status in OS | Status in Book |

|---|---|---|

| Load and Initialize interrupt descriptor table | ✓Load and Initialize the IDT Before PublicationDone | ⊙Load and Initialize IDT Before Publication |

| Interrupt handlers and the PIC | ✓Interrupt Handlers and the PIC Before PublicationDone | ⊙Interrupt Handlers and the PIC Before Publication |

| Timer interrupt | ✓Timer Interrupt Before PublicationDone | ⊙Timer Interrupt Before Publication |

I/O & Drivers

| Feature/Topic | Status in OS | Status in Book |

|---|---|---|

| VGA driver | ✓VGA Driver Before PublicationDone | ⊙VGA Driver Before PublicationDone |

| Keyboard driver | ⊙Keyboard Driver Before PublicationDone | ⊙Keyboard Driver Before Publication |

| Memory mapped IO and Port mapped IO | X | ⊙Memory Mapped IO and Port Mapped IO Before Publication |

| Scanning PCI bus | ⊙Scanning the PCI bus Before PublicationDone | ⊙Scanning the PCI bus Before Publication |

| AHCI driver | ⊙AHCI Driver Before PublicationIn Progress | ⊙AHCI Driver Before Publication |

File System

| Feature/Topic | Status in OS | Status in Book |

|---|---|---|

| FAT32 | ⊙FAT32 File System After Publication | ⊙FAT32 File System After Publication |

Process Management

| Feature/Topic | Status in OS | Status in Book |

|---|---|---|

| Spinlock mutex | ⊙Spinlock Mutex Before PublicationIn Progress | ⊙Spinlock Mutex Before Publication |

Utilities

| Feature/Topic | Status in OS | Status in Book |

|---|---|---|

| Debugging Tips | X | ⊙Debugging Tips Before PublicationDone |

| Build and Run the project | X | ⊙Build and Run the project Before Publication |

| Common Error with Hardware | X | ⊙Common Errors with Hardware Before Publication |

| Tests | ⊙Tests Before Publication | ⊙Tests Before Publication |

Chapter 1

"the journey of a thousand miles begins with one step." - lao tzu

Let's start our Operating System journey! There is a lot to learn, but every journey has to start somewhere :)

In this chapter we discuss:

- How to make a standalone1 binary

- How to make our binary bootable

-

A binary that doesn't require an operating system. ↩

Making a Standalone Binary

"Machines take me by surprise with great frequency." - Alan Turing

The first step in making our operating system, is to make a program that can be compiled, and executed, without any dependency.

This is not a straight forward task, because every program that we use in our daily life uses at least one, very important dependency, The Standard Library

This library is some of the time provided by the operating system itself, for example libc for the linux operating system, or the winapi for windows operating system, and most of the time it is wrapped around by our programming languages.

It name may vary per language, but here are some popular names:

Rust -> std::*

C++ -> std::*

C -> stdlib.h, libc.so

Python -> Modules like os, sys, math

Java -> java.*, javax.*

Go -> fmt, os

This library is linked1 to our code by default, and provides us with the ability to access our operating system.

Most of the time, programming languages tend to add additional functionality to their standard library. For example, the Rust Standard library, adds the println! macro for printing to screen, smart collections like a Vec, or a LinkedList, as well as Box for safe memory management, a lot of useful traits, very smart iterators and much much more!

Unfortunately, we won't have this luxury of a library and we will need to implement it all ourselves!

But don't worry, Rust has an ace up it sleeve and it provides with the fantastic Core library, which is a dependency free base for the standard library, and more over, it provides us with traits, and structures that can be linked into our own os, for example, once we write our memory allocator2, we could create a Vec from the core library, and we can tell it to use our own allocator!

So without further ado, Let's get started!

Making a Rust Project

First, make sure you have rust, installation instruction can be found here

Afterwards, you can create the project with the following command

$ cargo init <project_name>

$ cd <project_name>

If you have done everything correct, you project should look like this

<project_name>/

|-- Cargo.toml

|-- src

|- main.rs

and the main file, should look something like this:

fn main() {

println!("Hello, world!");

}This can easily be run on you computer with cargo run but, because you are running it on a regular computer, with a functioning operating system it uses the standard library.

Note: In all of this project we are using the nightly distribution of Rust. This is because some features that we are going to use in the future are not yet stable, and only available in nightly.

There are two way to include it, the first one, is to add

+nightlyon every cargo command, for example:$ cargo +nightly buildThe second option, is to create a file which will hold our default toolchain

[toolchain] channel = "nightly"This book uses the second approach.

Ignoring the Standard Library

As mentioned before we don't want to depend on the standard library because it is meant for an already existing operating systems. To ignore it, simply add #![no_std] on the top of our main file, this attribute tells the compiler that we don't want to use the standard library.

Now, if we then try to compile our crate, we get this error massage:

error: cannot find macro `println` in this scope

--> src/main.rs:4:5

|

4 | println!("Hello, world!");

| ^^^^^^^

error: `#[panic_handler]` function required, but not found

error: unwinding panics are not supported without std

|

= help: using nightly cargo,

use -Zbuild-std with panic="abort" to avoid unwinding

= note: since the core library is usually precompiled with panic="unwind",

rebuilding your crate with panic="abort"

may not be enough to fix the problem

When breaking this error down we see there are 3 main errors

- Cannot find macro

println #[panic handler]function is required- Unwinding panics are not supported without std.

The first error is more obvious, because we don't have our standard library, the println does not exist, so we simply need to remove the line that uses it, the other errors will require their own section.

Defining a Panic Handler

Rust doesn't offer a standard exception like other languages, for example, in python an exception could be raised like this

def failing_function(x: str):

if not isinstance(x, str):

raise TypeError("The type of x is not string!")

Instead, Rust provides us with the panic! macro, which will call the Panic Handler Function. This function is very important and it will be called every time the panic! macro will be invoked, for example:

fn main() {

panic!("This is a custom message");

}Normally, the Standard Library provides us with an implementation of the Panic Handler Function, which will typically print the line number, and file in which the error occurred. But, because we are now not using the Standard Library, we need to define the implementation of the function ourselves.

This function can be any function, it just have to include the attribute #[panic_handler], this attribute is added, so the compiler will know which function to use when invoking the panic! macro, to enforce that only one function of this type exists, and to also enforce the input argument and the output type.

If we create an empty function for the panic handler, we will get this error:

error[E0308]: `#[panic_handler]` function has wrong type

--> src\main.rs:10:1

|

10 | fn panic_handler() {}

| ^^^^^^^^^^^^^^^^^^ incorrect number of function parameters

|

= note: expected signature `for<'a, 'b> fn(&'a PanicInfo<'b>) -> !`

found signature `fn() -> ()

This means that it wants our function will get a reference to a structure called PanicInfo and will return the ! type.

But what is this struct? and what is this weird type?

The PanicInfo struct, includes basic information about our panic, such as the location, and message, and it's definition can be found in the core library

pub struct PanicInfo<'a> {

message: &'a fmt::Arguments<'a>,

location: &'a Location<'a>,

can_unwind: bool,

force_no_backtrace: bool,

}The ! type is a very special type in rust, called the never type, as the type name may suggest, it says that a function that return the ! type, should never return, which means our program has come to an end.

In a normal operating system, this is not a problem, just print the panic message + the location and kill the process, so it would not return. But in our own os unfortunately, this is not possible because there is not a process that we can exit. So, how can we prove to Rust we are not returning? by endlessly looping!

So at the end, this is the definition of our handler, which results in the following code

#![no_std]

fn main() {

}

#[panic_handler]

pub fn panic_handler(_info: &core::panic::PanicInfo) -> ! {

loop {}

}This code unfortunately still doesn't compile, because we didn't handle the last error

Some IDE's will still show an error, if you are like me, and want it to disappear, expand the following

Some IDE's like VSCode, and Zed that uses rust-analyzer, will throw an error that the panic_handler function already exists.

found duplicate lang item `panic_impl`

the lang item is first defined in crate `std` (which `test` depends on)

...

Each IDE has it's own way to configure rust-analyzer to to ignore these, a fixes for VSCode and Zed is shown below

VSCode

{

"rust-analyzer.check.allTargets": false,

"rust-analyzer.cargo.target": "x86_64-unknown-linux-gnu",

}

Zed

{

"lsp": {

"rust-analyzer": {

"initialization_options": {

"cargo": {

"target": "x86_64-unknown-linux-gnu"

},

"check": {

"allTargets": false

}

}

}

}

}

This works, because it tells rust-analyzer to use a target that doesn't include the standard library, and not to check other targets except the one we specified.

Note: You might have to install the target with

rustup add target x86_64-unknown-linux-gnu

What is Unwinding and How to Disable It

In a normal rust execution environment, when a program panics, it means that it has encountered an unrecoverable error

This means, that all of the memory should be cleaned up, so a memory leak doesn't occur. This is where unwinding comes in.

When a rust program panics, and the panic strategy is to unwind, rust goes up the stack of the program, and cleans up the data from each function that it encounters. However, walking back and cleaning up is a lot of work. Rust, therefore, allows you to choose the alternative of immediately aborting, which ends the program without cleaning up. This alternative is also useful in our case, where we don't have the sense of "cleaning up", because we still doesn't have an operating system.

So, to simply switch the panic strategy to abort, we can add the following line to our Cargo.toml file:

[profile.dev]

panic = "abort"

[profile.release]

panic = "abort"

After we disabled unwinding, we can now, hopefully try to compile our code!

But, by running cargo run we get the following error

error: using `fn main` requires the standard library

|

= help: use `#![no_main]` to bypass the Rust generated entrypoint

and declare a platform specific entrypoint yourself,

usually with `#[no_mangle]`

As per usual, the rust compiler errors are pretty clear, and they tell us exactly what we need to do to fix the problem. In this case, we need to add the #![no_main] attribute to our crate, and declare a platform specific entrypoint ourselves.

Defining an Entry Point

To define an entry point, we need to understand the linker.

The linker is a program that is responsible to structure our code into segments, define entry point, define the output format, and also link other code to our program. This configuration is controlled by a linker script. For example, a very simple linker script may look like this

OUTPUT_FORMAT(binary)

ENTRY(main)

This will set our entry point to main, and our output into a raw binary, which means the binary header3 of the program will not be included

Then, to make our linker to use this script, we have mainly two options, one is to add some arguments to our build command, and the other one is to create a build script. In this guide we use the following build script.

use std::path::Path;

fn main() {

// Environment variable that stores the current working directory

let local_path = Path::new(env!("CARGO_MANIFEST_DIR"));

// This tells cargo to add the `-C link-arg=--script=./linker.ld` argument.

// Which will result in linking with our code with our linker script

println!(

"cargo:rustc-link-arg-bins=--script={}",

local_path.join("linker.ld").display()

)

}But, after we do all this and again, run cargo build, we get the same error, at first, this doesn't seem logical, because we defined a main function. But, although it is true that we defined one, we didn't consider Rust's default mangling.

This is a very clever idea done by Rust, and without it, things like this wouldn't be possible

struct A(u32);

impl A {

pub fn new(a: u32) -> A {

A(a)

}

}

struct B(u32);

impl B {

pub fn new(b: u32) -> B {

B(b)

}

}Because although the function are defined on different structs, they have the same name. But, because of mangling, the actual name of the function would be something like

A::new -> _ZN7mycrate1A3new17h5f3a92c8e3b0a1a2E

B::new -> _ZN7mycrate1B3new17h1c2d3e4f5a6b7c8dE

A similar thing is happening to our main function, which makes it name not to be exactly 'main' so the entry point is not recognized.

To fix it, we can add the #[unsafe(no_mangle)] attribute to our main function, which will make it's name to be just 'main'

Which makes this, our final main.rs file!

#![no_std]

#![no_main]

#[unsafe(no_mangle)]

fn main() {

}

#[panic_handler]

pub fn panic_handler(_info: &core::panic::PanicInfo) -> ! {

loop {}

}Build Target

On some platforms, like MacOS, the default target is not compatible with #![no_std] binaries, so we need to change it to a more compatible one, like x86_64-unknown-none. This target ensures that the binary will be compiled for a 64 bit architecture, without any operating system.

Note: Build targets will be discussed in more detail in the next chapter. You might have to install the target with

rustup target add x86_64-unknown-none

Including the Core Library

As a final note, you might have noticed that we are using the PanicInfo struct from the core library.

To include it, without specifying it on the build command every time, we can create a cargo configuration file, and add the following lines to it

[unstable]

build-std = ["core"]

build-std-features = ["compiler-builtins-mem"]

unstable-options = true

This will tell cargo to always add the following arguments to the build command

-Z build-std=core -Z build-std-features=compiler-builtins-mem -Z unstable-options

If you followed through, the code should now compile with the following command

cargo build --target x86_64-unknown-none

Although the code compiled, it still doesn't make it bootable, which is what I will cover in the next section

-

Linking is the process of combining compiled software builds so they can use each other functions ↩

-

A subsystem in our operating system that is responsible for managing memory ↩

-

Operating systems have their own binary header, so they can understand how to treat a binary, some common ones are ELF and PE ↩

Booting Our Binary

"There is no elevator to success - you have to take the stairs." - Zig Ziglar

In the previous section, we created a stand alone binary, which is not linked to any standard library. But if you looked closely, and inspected the binary, you would see that we used a build target that is called x86_64-unknown-none, which is a generic target that doesn't specify any operating system or vendor, but it still specifies the architecture as x86_64, which is the architecture of most modern computers.

Understanding Rust Targets

The compiler of rust, rustc is a cross-compiler, which means it can compile the same source code into multiple architectures and operating systems.

This provides us with a lot of flexibility, but it is the core reason for our problem. This is because you are probably compiling this code from a computer with a regular operating system (Linux, Windows or MacOS) which rustc supports, which means that is it's default target. To see your default target, you can run rustc -vV and look at the host section.

The target contains information for the rustc compiler about which header should the binary have, what is the pointer and int size, what instruction set to use, and more information about the features of the cpu that it could utilize.

So, because we compiled our code just with cargo build, cargo, which under the hood uses rustc, compiled our code to our default target, which resulted in a binary that is operating specific and not a truly stand alone even though we used #![no_std].

Note: if you want to see the information of your computer target, use the following command

rustc +nightly -Z unstable-options --print target-spec-json

Custom Rust Target

To boot our binary, we need to create a custom target that will specify that no vendor or operating system in our target triple is used, and that it will contain the right architecture. But, what architecture we need?

In this guide, the operating system that we build will be compatible with the x86_64 computer architecture (and maybe other architectures in the far far future). So, for that we will need to understand what an x86_64 chip expects at boot time.

BIOS Boot Process

When our computer (or virtual machine) powers on, the first software that the CPU encounters is the BIOS, which is a piece of software that is responsible to perform hardware initialization during the computer start up. It comes pre installed on the motherboard and as an OS developer, we can't interfere or modify the BIOS in any way.

The last thing BIOS does before handing to us the control over the computer, is to load one sector (512 bytes) form the boot device (can be hard-disk, cd-rom, floppy-disk etc) to memory address 0x7c00 if the sector is considered valid, which means that it has the BIOS Boot Signature at the end of it, which is the byte sequence 0x55 followed by 0xAA in offset bytes 510 and 511 respectively.

At this time for backward compatibility reasons, the computer starts at a reduced instruction set, at a 16bit mode called real mode which provides direct access to the BIOS interface, and access to all of the I/O or peripheral devices. This mode lacks support for memory protection, multitasking, or code privileges, and has only 1Mib of address space. Because of these limitation we want to escape it as soon as possible, but that is a problem that we will solve later (Maybe add link to when this is done).

Building Our Target

With this information, we understand that we will need to build a target that will support 16bit real mode. Unfortunately, if we look at all of the available targets, we would see that there is no target that support this unique need, but, luckily, Rust allows us to create custom targets!

As a clue, we can try and peak on the builtin targets, and check if there is something similar that we can borrow. For example, my target, which is the x86_64-unknown-linux-gnu looks like this:

{

"arch": "x86_64",

"cpu": "x86-64",

"crt-static-respected": true,

"data-layout": "e-m:e-p270:32:32-p271:32:32-p272:64:64-i64:64-i128:128-f80:128-n8:16:32:64-S128",

"dynamic-linking": true,

"env": "gnu",

"has-rpath": true,

"has-thread-local": true,

"link-self-contained": {

"components": [

"linker"

]

},

"linker-flavor": "gnu-lld-cc",

"llvm-target": "x86_64-unknown-linux-gnu",

"max-atomic-width": 64,

"metadata": {

"description": "64-bit Linux (kernel 3.2+, glibc 2.17+)",

"host_tools": true,

"std": true,

"tier": 1

},

"os": "linux",

...

}

This target has some useful info that we can use, like useful keys, such as arch, linker-flavor, cpu and more, that we will use in our target, and even the data-layout that we will copy almost entirely. Our final, 16bit target, will look like this:

{

// The general architecture to compile to, x86 cpu architecture in our case

"arch": "x86",

// Specific cpu target - Intel i386 CPU Which is the original 32-bit cpu

// Which is compatible for 16-bit real mode instructions

"cpu": "i386",

// Describes how data is laid out in memory for the LLVM backend, split by '-':

// e -> Little endianness (E for big endianness)

// m:e -> ELF style name mangling

// p:32:32 -> The default pointer is 32-bit with 32-bit address space

// p270:32:32 -> Special pointer type ID-270 with 32-bit size and alignment

// p271:32:32 -> Special pointer type ID-271 with 32-bit size and alignment

// p271:64:64 -> Special pointer type ID-272 with 64-bit size and alignment

// i128:128 -> 128-bit integers are 128-bit aligned

// f64:32:64 -> 64-bit floats are 32-bit or 64-bit aligned

// n:8:16:32 -> Native integers are 8-bit, 16-bit, 32-bit

// S128 -> Stack is 128-bit aligned

"data-layout": "e-m:e-p:32:32-p270:32:32-p271:32:32-p272:64:64-i128:128-f64:32:64-f80:32-n8:16:32-S128",

// No dynamic linking is supported, because there is no OS runtime loader.

"dynamic-linking": false,

// This target is allowed to produce executable binaries.

"executables": true,

// Use LLD's GNU compatible frontend (`ld.lld`) for linking.

"linker-flavor": "ld.lld",

// Use the Rust provided LLD linker binary (bundled with rustup)

// This makes that our binary can compiled on every machine that has rust.

"linker": "rust-lld",

// LLVM target triple, code16 indicates for 16bit code generation

"llvm-target": "i386-unknown-none-code16",

// The widest atomic operation is 64-bit (TODO! Check if this can be removed)

"max-atomic-width": 64,

// Disable position independent executables

// The position of this executable matters because it is loaded at address 0x7c00

"position-independent-executables": false,

// Disable the redzone optimization, which saves in advance memory

// on a functions stack without moving the stack pointer which saves some instructions

// because the prologue and epilogue of the function are removed

// this is a convention, which means that the guest OS

// won't overwrite this otherwise 'volatile' memory

"disable-redzone": true,

// The default int is 32-bit

"target-c-int-width": 32,

// The default pointer is 32-bit

"target-pointer-width": 32,

// The endianness, little or big

"target-endian": "little",

// panic strategy, also set on cargo.toml

// this aborts execution instead of unwinding

"panic-strategy": "abort",

// There is no target OS

"os": "none",

// There is not target vendor

"vendor": "unknown",

// Use static relocation (no dynamic symbol tables or relocation at runtime)

// Also means that the code is statically linked.

"relocation-model": "static"

}

Now, the only thing left to do before we can run our code, is to include the boot signature at our binary. This can be done in the linker script by adding the following lines:

SECTIONS {

/*

Make the start offset of the file 0x7c00 This is useful,

because if make jump to a function that it's offset in the binary is 0x100,

it will actually be loaded at address 0x7d00 by the BIOS, and not 0x100,

so we need to consider this offset, and that's how we do it.

*/

. = 0x7c00;

/*

Currently, we have nothing on the binary,

if we write the signature now, it will be at the start of the binary.

Because we want the signature to start at the offset of 510 in our binary,

we pad it with zeros.

*/

.fill : {

FILL(0)

. = 0x7c00 + 510;

}

/* Write the boot signature to make the sector bootable */

.magic_number : { SHORT(0xaa55) }

}

To compile our code, we just need to run the following command:

cargo build --release --target .\16bit_target.json

To see that indeed, the boot signature is in the correct place, we can use the Format-Hex command in windows or the hexdump command in Linux or MacOS to see the hex of our file.

This should result in a lot of zeros, and at the end, this line, where we can see the boot signature in the right offset

000001F0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 55 AA

Running Our Code

Because our code is experimental, we will not want to run it on our machine, because it can make PERMANENT DAMAGE to it. This is because we don't monitor cpu temperature, and other hardware sensors that can help us protect our pc. Instead, we will run our code in QEMU, which is a free and open-source full machine emulator and virtualizer. To download QEMU for your platform, follow the instructions here

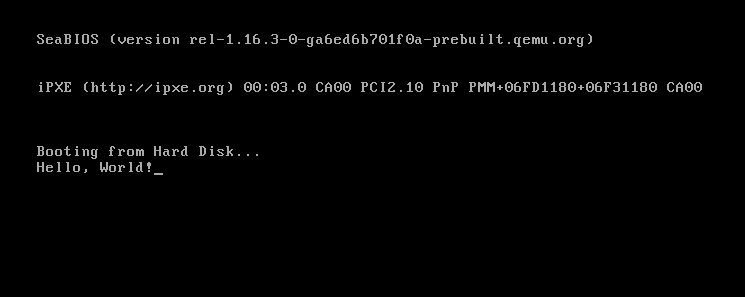

To make a sanity check that QEMU indeed works on your machine with our wanted architecture after you downloaded it, run qemu-system-x86_64 on a terminal. This should open a window and in it write some messages it tries to boot from certain devices, and after it fails, it should write it cannot find any bootable device. If that's what you are seeing, it all works as it should!

To provide our code, we need to add the -drive format=raw,file=<path-to-bin-file> flag to qemu, which will add to our virtual machine a disk drive with our code.

qemu-system-x86_64 -drive \

format=raw,file=<output_binary>

At a first glance, we might think our code still doesn't work, because all we see is a black screen, but, if you notice closely, we don't get more messages of the BIOS trying other boot devices, and we don't get the message of "No bootable device.".

So why we see black screen? This is because we didn't provide the computer any code to run and our main function is empty, but now we have the platform to write any code that we like!

Hello, World!

To print "Hello, World!", we can utilize the BIOS video interrupt which can help us print ASCII characters to the screen.

For now, don't worry about the code implementation and just use and play with it. This code piece, and a lot more will be explained in the next chapter.

use core::arch::asm;

#[unsafe(no_mangle)]

fn main() {

let msg = b"Hello, World!";

for &ch in msg {

unsafe {

asm!(

"mov ah, 0x0E", // INT 10h function to print a char

"mov al, {0}", // The input ASCII char

"int 0x10", // Call the BIOS Interrupt Function

// --- settings ---

in(reg_byte) ch, // {0} Will become the register with the char

out("ax") _, // Lock the 'ax' as output reg, so it won't be used elsewhere

);

}

}

unsafe {

asm!("hlt"); // Halt the system

}

}When we try to compile and run our code, we can see that it's indeed booting, but we don't see any massage.

If you believe me that the code above is correct, and indeed works, we can try and look at the binary file that the compiler emitted with the hexdump command in Linux or MacOS, or Format-Hex in Windows.

When we do that, we can notice that it seems that more code was added, but at the end of the file, and not at the start of it, and more over, it is located after the first sector which means it doesn't even loaded by the BIOS. To resolve this, we need to learn about the default segment rustc generates.

Default Segments In Rust

- .text - Includes the code of our program, which is the machine code that is generated for all of the functions

> fn some_function(x: u32, y: u32) -> u32 { return x + y; }- .data - Includes the initialized data of our program, like static variables.

> static VAR: u32 = 42;- .bss - Includes the uninitialized data of our program

> static mut MESSAGE: String = MaybeUninit::uninit();- .rodata - Includes the read-only data of our program

> static mut MESSAGE: &'static str = "Hello World!";- .eh_frame & .eh_frame_hdr - Includes information that is relevant to exception handling and stack unwinding. These section are not relevant for us because we use

panic = "abort".

So, to make our linker put the segments in the right position, we need to change the SECTION segment of our linker script to this.

SECTIONS {

. = 0x7c00;

/*

Rust also mangles segment names.

The "<segment_name>.*" syntax is used to also include all the mangles

*/

.text : { *(.text .text.*) }

.bss : { *(.bss .bss.*) }

.rodata : { *(.rodata .rodata.*) }

.data : { *(.data .data.*) }

/*

This segment is not relevant for us, because we use `panic = "abort"`,

but to be sure that nothing unexpected happens, we discard it.

*/

/DISCARD/ : {

*(.eh_frame .eh_frame.*)

*(.eh_frame_hdr .eh_frame_hdr.*)

}

. = 0x7c00 + 510;

.magic_number : { SHORT(0xaa55) }

}

Now, when we compile and run our code, we can see our message!

Debugging Our Operating System

"If debugging is the process of removing bugs, then programming must be the process of putting them in." - Edsger W. Dijkstra

Debugging is a crucial part for a good operating system, and especially on the start of the development when we still don't have good debugging methods, like printing, we need to use other methods.

By far the most annoying bug is the triple fault, which will be explained extensively in the Interrupts Chapter. In short, this is an error that is not recoverable, and the CPU will reset itself, and it looks like this:

Although this state is not recoverable, there are some methods to debug it even without having the ability to print.

Reverse Engineering our Code

One of the most useful methods to debug our code is to use a disassembler. A disassembler is a tool that takes binary code and converts it back into assembly language, which can help us understand what the CPU is executing at any given time.

By analyzing the assembly code, we can gain insights into the control flow and data manipulation happening within our operating system. This can be especially helpful when trying to identify the root cause of a bug or unexpected behavior.

Extracting Memory Dumps

Another useful debugging technique is to extract memory dumps. A memory dump is a snapshot of the contents of the system's memory at a specific point in time. By examining the memory dump, we can see the state of various variables, data structures, and the stack at the moment of failure.

This provides valuable information about CPU structures that we are loading to the CPU, which might cause the triple fault if we don't initialize them correctly.

A memory dump can be obtained with the following commands.

First, run the qemu virtual machine with the --monitor stdio option to enable the QEMU monitor interface in the terminal.

Then, in this terminal, run the following command:

(qemu) pmemsave <start_address> <size> <file name>

// For example

(qemu) pmemsave 0x1000 0x500 memory.dump

// This will create a dump of size 0x500 from 0x1000 - 0x1500.

Minimal Printing

Once we write our kernel, the first thing that we will do is to write a print method with formatting, because it is one of the best ways to debug our code.

In the bootloader, in a time we didn't write our print yet, we will mostly debug with the methods above, but we can for debug purposes print characters, and even small strings using the BIOS like we did in our Hello, World! program.

A Minimal Bootloader

"From a small spark may burst a mighty flame." - Dante Alighieri

Writing a bootloader is not an easy task, and it can include a lot of things. In this book we will write the minimal needed bootloader to load our kernel, and obtain information that is necessary to it.

In this chapter we will implement the following features:

- Setup registers and stack

- Enable the A20 line

- Read kernel from disk

- Load the global descriptor table

- Enable Paging

These features are enough, at least for the start of our kernel, and later in the book you will see we will implement more features like obtaining a memory map, enabling text mode, locate the kernel in the file system and more!

Legacy Legacy Legacy

"Compatibility means deliberately repeating other people's mistakes." - David Wheeler

When writing our bootloader and especially on the first stages we encounter a lot of legacy that needs to be handled. This legacy may come in multiple shapes, like bios interrupts, magic numbers and things that are needed to be initialized and most of this stuff will be covered in this chapter.

Note: from now on, each of the code blocks will be structured as they are in the real project, and every time a code file will have a path in it, that will be the same path in the real project. For example, our first stage will be located in the kernel/stages/first_stage directory.

Our project structure will include the following directories

- kernel -> For the kernel code, and booting stages.

- shared -> Shared crates that are relevant for multiple cases.

- build -> Will include build utilities, like targets, linker scripts and more.

The Cargo.toml in the root of the project will include a

workspacedefinition which will include all of the crates from our project.

Basic Initialization

At the start of our code, we want to zero out all of the memory segments in our machine, so all of the addresses that we will access will not be manipulated by the segments.

This manipulation can happen if the segments are not zeroed out, because certain instructions of the CPU will assume segments.

For example, the instruction mov, eax [0x1000] will assume address 0x1000 is prefixed by the ds register.

In 16bit real mode, the translation is as follows:

Physical address = (Segment * 0x10) + Specified Address

// For example, if we want to fetch data,

// and the data segment is 0x1000 and we want data at address 0x2000.

Final = 0x1000 * 0x10 + 0x2000

Which will result in the address 0x12000 instead of 0x2000

This technique results in a 20bit maximum address space instead of 16bit, which is 1Mib

So, to zero down all the segments we will use the following code:

//; This will define a boot section for this asm code,

//; which we can put at the start of our binary.

.section .boot, "awx"

.global start

.code16

start:

//; Disable interrupts

cli

//; zero segment registers

xor ax, ax

mov ds, ax

mov es, ax

mov ss, ax

mov fs, ax

mov gs, ax

Then, we want to initialize the stack and the direction flag.

It is important to state the stack at a position that will not overwrite our code, this could happen because our code and the local variables we save can be in the same place in memory which might cause a push instruction to overwrite an instruction that we need. because of the we initialize the stack at 0x7c00 which will ensure it will not happen, because that stack only grows down.

//; clear the direction flag (e.g. go forward in memory when using

//; instructions like lodsb)

cld

//; initialize stack

mov sp, 0x7c00

- Setup registers and stack

A20 Line

The next step in our initialization is to enable the A20 line which is a legacy pain that we need to handle. This is the 21st address line in our bus which is disabled by default due to compatibility reasons. Right now it is not a problem, because we can only access 1Mib of address space, but later in our operating system, we will want to be able to access all of the memory space we have, so we will need to enable this address line.

There are a lot of ways to enable the A20 line, the code we will use is a fast A20 that is implemented mostly on new chipsets, this method is DANGEROUS and on some chipsets it may do something else, or even DAMAGE the computer, and because of that, at least for now, we run this operating system ONLY on a virtual machine because we are not handling all of the cases.

Luckily, this method works on our QEMU virtual machine

//; Enable the A20 line via I/O Port 0x92

//; This method might not work on all motherboards

//; Use with care on real hardware!

enable_a20:

//; Check if a20 is already enabled

in al, 0x92

test al, 2

//; If so, skip the enabling code

jnz enable_a20_after

//; Else, enable the a20 line

or al, 2

and al, 0xFE

out 0x92, al

enable_a20_after:

- Enable the A20 line

Now, after enabling the a20 line, we want to load from the disk into memory the rest of the bootloader and of course, our kernel. This is not a trivial task, especially when we have less then 512 bytes of code to do so. But don't worry, because the BIOS will come to our help.

BIOS Interrupts

BIOS Interrupts are an interface that is provided by the BIOS, that will allow us to perform numerous operations to our system with just a few assembly instructions.

For now, you don't have to understand how interrupts work, and this topic will be explained deeply later.

What you do need to understand, is that each set of functions that the BIOS provides has a number

these sets are divided by topics, and the specific function in each set that we will want to use will also have a number that we will put in the a register.

For example, if we want to check our drive status, we will need interrupt number 0x13, which is the set of function that correspond for disk operations, and we will need function that corresponds to the number 0x1. (This information could be looked up here) then, calling the function will look like this:

; Set up registers per specification

...

; Set `ah` with the function code

mov ah, 1

; Call the relevant interrupt

int 0x13

; For this function, `ah` will contain the result, nonzero value means an error

; So we can parse the value as follows

test ah, ah

jnz disk_has_a_problem

Note: Most functions have a specification on the internet for what to put in each register, and in which register the output will be. the specification for the above function can be found here

Reading From Disk

To read our kernel from the disk, we can utilize two functions that are provided by the BIOS, the first one is int 0x13 ah=0x2 which is the read sector function, this is an older function that reads sectors from the disk into memory by providing the cylinder, head and sector. The other function is the int 0x13 ah=0x42 which is the extended read function, this is a newer function that reads from disk using the disk packet structure.

Both of these functions will be explained, and at the end, we will use the newer one.

Cylinder, Head and Sector

In today's works, there are multiple ways to store persistent information, SSD and NVMe which are newer storage hardware that provides fast access speeds to data and lower latency comparing to HDD which is an older technology that the BIOS works with.

To read from hdd, we first need to understand it's geometry.

Each disk contains multiple platters which are a magnetic disk that can store data, each platter can store information on both sides, so the number of heads is 2 * platters.

Each head of the disk is divided into inner circles which are called tracks, the set of aligned tracks on all of the heads is called a cylinder.

Finally the sector is the arc on the track that actually holds our data, sectors have common a commons size of 512 bytes, but sometimes have larger size.

With that information, we can understand that the disk uses a 3D coordinate system, and in order to specify which sector we want to read, we need to specify a cylinder number that the sector is in, then, provide the head number, in order to specify the track the sector is in, and then we provide the sector number in the track to get the actual sector that holds our data. This can be demonstrated with this picture:

Note: To obtain how many cylinders, heads and sectors are on a disk we can use the BIOS

int 0x13 ah=0x8function or theint 0x13 ah=0x48function

With that said, this is not a surprise that the simple, read sector BIOS function needs exactly this information.

; Set data segment to 0

xor ax, ax

mov ds, ax

; Number of sectors to read in `al`

mov al, 63

; Cylinder number in `ch`

mov ch, 0

; Sector number in cl

; The first sector is already loaded to memory by the BIOS

; And the sector count starts at 1 and not 0

mov cl, 2

; Head number in 'dh' 0

mov dh, 0

; `dl` should already contains the drive number from BIOS if not overrode.

; The buffer to read to is es:bx.

; Since BIOS loads 512 bytes at the start, the next empty address is 0x7e00

; This address can be represented in multiple ways because of segmentation

; For example es=0x7e0, bx=0 or es=0, bx=0x7e00

xor bx, bx

mov es, bx

mov bx, 7e00h

; Put function code in `ah`

mov ah, 2

; Call the function

int 13h

Logical Block Addressing

Although the Cylinder-Head-Sector model is quite accurate and specific, it is harder to understand, and requires the knowledge of the disk geometry.

Moreover, it is not compliant with other, newer disk hardware like SSD or NVMe.

Because of that, a better addressing scheme was proposed which is called Logical Block Addressing or LBA for short.

This is a linear address scheme, where each address is a sector, or so called data block.

This, unlike the sector count scheme is a zero-based address, which means the first block is at address 0, the second at address 1 and so on.

This address scheme is compatible to CHS addressing, and a CHS address can be translated to an LBA with the following formula:

$$ LBA = (C x N_{Heads Per Cylinder} + H) x K_{Sectors Per Track} + (S - 1) $$

This address can translate backwards, so an LBA address can become a CHS tuple with these formulas:

$$ Cylinder = \text{LBA} \div ({N_{Heads Per Cylinder}} \times K_{Sectors Per Track}) \ $$ $$ Head = (\text{LBA} \div {N_{Heads Per Cylinder}}) \bmod K_{Sectors Per Track} \ $$ $$ Sector = (\text{LBA} \bmod K_{Sectors Per Track}) + 1 $$

Disk Address Packet

After learning about LBA, the only logical thing to think, is how to read data from the disk using LBA instead of CHS.

This is where the extended read functions comes in, it expects a structure called the disk address packet which looks like this:

// The `repr(C)` means that the layout in memory will be as

// specified (like in C) because rust ABI doesn't state that

// this is promised.

//

// The `repr(packed) states that there will no padding due

// to alignment

#[repr(C, packed)]

pub struct DiskAddressPacket {

/// The size of the packet

packet_size: u8,

/// Zero

zero: u8,

/// How many sectors to read

num_of_sectors: u16,

/// Which address in memory to save the data

memory_address: u16,

/// Memory segment for the address

segment: u16,

/// The LBA address of the first sector

abs_block_num: u64,

}But, just before we use it, we need to check if this extension is available on our disk. This can be done with int 0x13 ah=0x41 which checks if all extended functions are available on our disk.

The check can be done with the following code:

check_int13h_extensions:

//; Set function constants `dl` already contains the driver

mov ah, 0x41

mov bx, 0x55aa

int 0x13

jnc .int13_pass

//; hlt system if there is no support

hlt

.int13_pass:

Because we are all using the same emulator, it should pass the hlt instruction and continue execution. Now to read from disk we can implement a read function to our disk packet.

This is quite straight forward, we will create a new function that will initialize our packet from basic inputs, create a load function that will call int 0x13 ah=0x42 for us with the packet to the right disk.

First, for organization, we will create some helpful enums.

#[repr(u8)]

/// BIOS interrupts number for each interrupt type used in

/// the kernel.

pub enum BiosInterrupts {

Video = 0x10,

Disk = 0x13,

Memory = 0x15,

}

#[repr(u8)]

/// Disk interrupt number for each function used in the

/// kernel.

pub enum DiskInterrupt {

ExtendedRead = 0x42,

}Then, we can create an initializer function for our disk packet

impl DiskAddressPacket {

pub fn new(

num_of_sectors: u16,

memory_address: u16,

segment: u16,

abs_block_num: u64,

) -> Self {

Self {

// The size of the packet

packet_size: size_of::<Self>() as u8,

// zero

zero: 0,

// Number of sectors to read, this can be a max of 128 sectors.

// This is because the address increments every time we read a

// sector. The largest number a register in this

// mode can hold is 2^16 When divided by a sector

// size, we get that we can read only 128 sectors.

num_of_sectors: num_of_sectors.min(128),

// The initial memory address

memory_address,

// The segment the memory address is in

segment,

// The starting LBA address to read from

abs_block_num,

}

}

}And then, finally the function that will call the interrupt with our packet, and will read the disk content into memory.

impl DiskAddressPacket {

pub fn load(&self, disk_number: u8) {

unsafe {

// This is an inline assembly block

// This block's assembly will be injected to the function.

asm!(

// si register is required for llvm it's content needs to be

// saved

"push si",

// Set the packet address in `si` and format it for a 16bit

// register

"mov si, {0:x}",

// Put function code in `ah`

"mov ah, {1}",

// Put disk number in `dl`

"mov dl, {2}",

// Call the `disk interrupt`

"int {3}",

// Restore si for llvm internal use.

"pop si",

in(reg) self as *const Self as u16,

const DiskInterrupt::ExtendedRead as u8,

in(reg_byte) disk_number,

const BiosInterrupts::Disk as u8,

)

}

}

}Read The Kernel

Now, all that left is to put it all together!

we can create a disk packet in our entry function, and load it!

But, just before we can do that, we need get some how the disk number we are in, and call our function.

The disk number that we booted from as used in above examples is in the dl register, so we can push it to the stack.

Then, use the no_mangle attribute on our function and call it by it's name.

Then, we can get the disk number from the stack, and load our packet.

//; push disk number into the stack will be at 0x7bfe

//; and call the first_stage function

push dx

call first_stage

And create a constant for the disk number memory address Then, in the first stage function

pub const DISK_NUMBER_OFFSET: u16 = 0x7BFE;

#[unsafe(no_mangle)]

pub fn first_stage() -> ! {

// Read the disk number the os was booted from

let disk_number =

unsafe { core::ptr::read(DISK_NUMBER_OFFSET as *const u8) };

// Create a disk packet which will load 4 sectors (512 bytes each)

// from the disk to memory address 0x7e00

// The address 0x7e00 was chosen because it is exactly one sector

// after the initial address 0x7c00.

let dap = DiskAddressPacket::new(

4, // Number of sectors

0, // Memory address

0x7e0, // Memory segment

1, // Starting LBA address

);

dap.load(disk_number);

}- Read kernel from disk

Although everything seems correct now, and data from the disk should now be in memory, it will still not compile and boot properly. But I will leave it as a challenge for you!

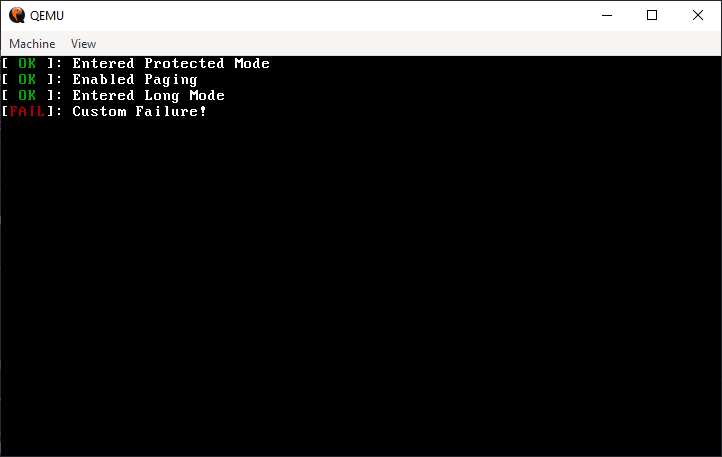

Entering Protected Mode

"With great power comes great responsibility." - Voltaire / Spider-Man

After we read from disk, it will enable us to write much more code, because we are not limited to 512 bytes.

But just before we do that, we don't want to limit ourselves only to 16bit instructions.

For that we need to enter protected mode which will allow us to unlock some cpu features such as 32bit instructions.

Entering protected mode requires us to initialize the global descriptor table which is a CPU structure that will be discussed in depth bellow, and toggling the protected mode bit in cr0

The Global Descriptor Table

This is a structure that is specific to the x86 cpu family, and it contains information about the different segments. In general, segments are used to divide memory into logical parts and as we seen in real mode, to also translate addresses.

In protected mode, the common way to organize memory is using these segments. Because segments registers can only hold one number,

they can't hold enough information for us, and that is where the global descriptor table comes in place.

The global descriptor table is an array of structures that include information about a segment,

when we want to use our custom segment, we load it's offset to the segment register.

For example, we can create a segment for user data at index one of our table.

this segment will not hold important data for the system, and will not contain code that can be executed,

if we want to load it into the ds we will set it to the offset of the structure in the table.

Instead of just revealing you the structure that is used for each segment, I want you to pause and ponder about what each segment should include.

Remember that some instructions assume segments, like mov, jmp etc. and we want segments for the kernel, users, date and code

When I asked myself this question, I came up with the following ideas:

- What is the initial address of the segment. i.e the start address in memory where the segment starts.

- What is the end address of the segment. i.e the end address in memory where the segment ends.

- What the segment includes. i.e data segment, code segment etc.

- What is the privilege level of the segment. i.e can anyone access it or only the kernel

- For a data segment, Is the data read only, or may I modify it?

- For a code segment, Can I execute it, or not yet.

Although this first guess of what the global descriptor table includes don't include everything, It is mostly accurate!

Our entry will look like this:

But what are these fields?

- Base: this is a 32-bit value, which is split on the entire entry and it represents the address of where the segment begins.

- Limit: this is a 20-bit value, which is split on the entire entry, and it represents the size of the segment.

- Access Byte: flags that are relevant to the memory range of the segment, like the access privileges of this segment.

- Flags: general flags that are relevant for the entry fields.

All of these fields can become a struct and together they will represent a single entry.

struct AccessByte(u8);

/// Low 4 bits limit high 4 bits flags

struct LimitFlags(u8);

#[repr(C, packed)]

struct GlobalDescriptorTableEntry32 {

limit_low: u16,

base_low: u16,

base_mid: u8,

access_byte: AccessByte,

limit_flags: LimitFlags,

base_high: u8,

}Both the AccessByte and the LimitFlags and more structures throughout the book, are using one bit flags, which represents some inner settings to the cpu.

Although setting one bit flag is easy, and can be done with 1 << bit_number to set the nth bit, we would like abstractions such as set_<flag_name>, which are more readable and error prone.

But, if we would do that to every flag, it will be A LOT of boiler plate code.

For this reason, rust provides us with an amazing macro system

Note: If you are unfamiliar with macros, and especially rust macros, a little explanation will be given in this book, to read more about rust's macros, click here

So, to mitigate all of this boiler plate, will will create a flag! macro.

The goal of this macro is to use the flag name, and it's bit number to generate utility functions that are readable and error prone.

Our macro will look like this:

/// This macro will obtain `flag_name` and the corresponding

/// `bit_number`

///

/// With this information it will automatically generate

/// three methods

///

/// 1. `set_$flag_name`: set the bit without returning self

/// 2. `$flag_name`: set the bit and will return self

/// 3. `unset_$flag_name`: unset the bit without returning self

/// 4. `is_$flag_name`: return true if the flag is set or false if not

#[proc_macro]

pub fn flag(input: TokenStream) -> TokenStream {

let FlagInput { name, bit, .. } =

syn::parse_macro_input!(input as FlagInput);

// build identifiers

let name_str = name.to_string();

let set_ident = format_ident!("set_{}", name_str);

let unset_ident = format_ident!("unset_{}", name_str);

let is_ident = format_ident!("is_{}", name_str);

let expanded = quote! {

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Sets the corresponding flag

pub const fn #set_ident(&mut self) {

self.0 |= 1 << #bit;

}

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Sets the corresponding flag while returning self

pub const fn #name(self) -> Self {

Self(self.0 | (1 << #bit))

}

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Unset the corresponding flag

pub const fn #unset_ident(&mut self) {

self.0 &= !(1 << #bit);

}

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Checks if the corresponding flag is set

pub const fn #is_ident(&self) -> bool {

(self.0 & (1 << #bit)) != 0

}

};

expanded.into()

}While this macro seems complex, it will just create four functions that will help up set, unset and read the flag.

To see what this macro generated, we can you the amazing cargo-expand tool created by David Tolnay

To see an example

A simple code like this:

struct Example(u8);

impl Example {

flag!(first, 1);

flag!(second, 2);

flag!(third, 3);

}Will be expanded to this:

struct Example(u8);

impl Example {

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Sets the corresponding flag

///

/// `This method is auto-generated`

pub const fn set_first(&mut self) {

self.0 |= 1 << 1;

}

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Sets the corresponding flag while returning self

///

/// `This method is auto-generated`

pub const fn first(self) -> Self {

Self(self.0 | (1 << 1))

}

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Unset the corresponding flag

///

/// `This method is auto-generated`

pub const fn unset_first(&mut self) {

self.0 &= !(1 << 1);

}

/// Checks if the corresponding flag in set to 1

///

/// `This method is auto-generated`

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

pub const fn is_first(&self) -> bool {

self.0 & (1 << 1) != 0

}

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Sets the corresponding flag

///

/// `This method is auto-generated`

pub const fn set_second(&mut self) {

self.0 |= 1 << 2;

}

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Sets the corresponding flag while returning self

///

/// `This method is auto-generated`

pub const fn second(self) -> Self {

Self(self.0 | (1 << 2))

}

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Unset the corresponding flag

///

/// `This method is auto-generated`

pub const fn unset_second(&mut self) {

self.0 &= !(1 << 2);

}

/// Checks if the corresponding flag in set to 1

///

/// `This method is auto-generated`

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

pub const fn is_second(&self) -> bool {

self.0 & (1 << 2) != 0

}

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Sets the corresponding flag

///

/// `This method is auto-generated`

pub const fn set_third(&mut self) {

self.0 |= 1 << 3;

}

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Sets the corresponding flag while returning self

///

/// `This method is auto-generated`

pub const fn third(self) -> Self {

Self(self.0 | (1 << 3))

}

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

/// Unset the corresponding flag

///

/// `This method is auto-generated`

pub const fn unset_third(&mut self) {

self.0 &= !(1 << 3);

}

/// Checks if the corresponding flag in set to 1

///

/// `This method is auto-generated`

#[inline]

#[allow(dead_code)]

#[allow(unused_attributes)]

pub const fn is_third(&self) -> bool {

self.0 & (1 << 3) != 0

}

}So now, without a lot of boiler plate, we can define our AccessByte and LimitFlags.

We will also define an enum that will include the protection level, so it would be more clear

pub enum ProtectionLevel {

Ring0 = 0,

Ring1 = 1,

Ring2 = 2,

Ring3 = 3,

}

impl AccessByte {

/// Creates an access byte with all flags turned off.

pub const fn new() -> Self {

Self(0)

}

// Is this a valid segment?

// for all active segments this should be turned on.

flag!(present, 7);

/// Sets the privilege level while returning self.

/// This is corresponding to the cpu ring of this

/// segment 0 is commonly called kernel mode, 4 is

/// commonly called user mode

pub const fn dpl(mut self, level: ProtectionLevel) -> Self {

self.0 |= (level as u8) << 5;

self

}

// Is this a code / data segment or a system segment.

flag!(code_or_data, 4);

// Will this segment contains executable code?

flag!(executable, 3);

// Will the segment grow downwards?

// relevant for non executable segments

flag!(direction, 2);

// Can this code be executed from lower privilege

// segments. relevant to executable segments

flag!(conforming, 2);

// Can this segment be read or it is only executable?

// relevant for code segment

flag!(readable, 1);

// Is this segment writable?

// relevant for data segments

flag!(writable, 1);

}

impl LimitFlags {

/// Creates a default limit flags with all flags turned

/// off.

pub const fn new() -> Self {

Self(0)

}

// Toggle on paging for this segment (limit *= 0x1000)

flag!(granularity, 7);

// Is this segment going to use 32bit mode?

flag!(protected, 6);

// Set long mode flag, this will also clear protected

// mode

flag!(long, 5);

}Now, just before creating a new function to our entry, we don't want each time to specify the base in three parts and the limit in two parts, instead we want the new function to take care of that.

This will complicate it a bit, but will provide much more friendly interface.

/// Initial temporary GDT

#[repr(C, packed)]

pub struct GlobalDescriptorTableProtected {

null: GlobalDescriptorTableEntry32,

code: GlobalDescriptorTableEntry32,

data: GlobalDescriptorTableEntry32,

}

impl GlobalDescriptorTableEntry32 {

pub const fn new(

base: u32,

limit: u32,

access_byte: AccessByte,

flags: LimitFlags,

) -> Self {

// Split base into the appropriate parts

let base_low = (base & 0xffff) as u16;

let base_mid = ((base >> 0x10) & 0xff) as u8;

let base_high = ((base >> 0x18) & 0xff) as u8;

// Split limit into the appropriate parts

let limit_low = (limit & 0xffff) as u16;

let limit_high = ((limit >> 0x10) & 0xf) as u8;

// Combine the part of the limit size with the flags

let limit_flags = flags.0 | limit_high;

Self {

limit_low,

base_low,

base_mid,

access_byte,

limit_flags: LimitFlags(limit_flags),

base_high,

}

}

}Jumping to the next stage!

Now, after understanding the global descriptor table, we want to jump to the next stage. This will require us to create and load a temporary global descriptor table.

Each table must have at least three entries, an initial null entry that is filled with zeros, which is always required as the first entry, a data entry for the data segment so we can read and write to memory, and code entry so we can execute code.

Together it will all look like this:

impl GlobalDescriptorTableProtected {

pub const fn default() -> Self {

Self {

null: GlobalDescriptorTableEntry32::empty(),

code: GlobalDescriptorTableEntry32::new(

0,

0xfffff,

AccessByte::new()

.present()

.dpl(ProtectionLevel::Ring0)

.code_or_data()

.executable()

.readable(),

LimitFlags::new().granularity().protected(),

),

data: GlobalDescriptorTableEntry32::new(

0,

0xfffff,

AccessByte::new()

.present()

.dpl(ProtectionLevel::Ring0)

.code_or_data()

.writable(),

LimitFlags::new().granularity().protected(),

),

}

}

}If you noticed, all of the functions that we defined so far are marked with const this is useful because we can create our global descriptor table as a static variable, which will be in the binary.

This is useful because it will make our initialization of the global descriptor table to be in compile time.

So, the only thing left to do is to load the global descriptor table. This can be done with the lgdt instruction which loads the Global Descriptor Table Register with our table. This is a hidden register that includes information about our global descriptor table, like it's size and address in memory.

We will create a load function that will create this register structure, and will load it to the cpu.

impl GlobalDescriptorTableProtected {

pub unsafe fn load(&'static self) {

let gdtr = {

GlobalDescriptorTableRegister {

limit: (size_of::<Self>() - 1) as u16,

base: self as *const _ as usize,

}

};

unsafe {

instructions::lgdt(&gdtr);

}

}

}Now, to apply all of the created functionality, enable protected mode, and to jump to the next stage, we need add the following code to our entry function.

But just before that, when we jump to the next stage, we need to specify the offset in the GDT of the relevant section we want to jump to. In out case it is the kernel_code section, which will allow us to run code on ring0. For an easy way to specify the section, we will create an enum.

// Notice that this also contains segments of other GDT

// that we will use in the future

#[repr(u8)]

pub enum Sections {

Null = 0x0,

KernelCode = 0x8,

KernelData = 0x10,

UserCode = 0x18,

UserData = 0x20,

TaskStateSegment = 0x28,

}

static GLOBAL_DESCRIPTOR_TABLE: GlobalDescriptorTableProtected =

GlobalDescriptorTableProtected::default();

pub fn first_stage() -> ! {

// Load Global Descriptor Table

GLOBAL_DESCRIPTOR_TABLE.load();

// Set the Protected Mode bit and enter Protected Mode

asm!(

"mov eax, cr0",

"or eax, 1",

"mov cr0, eax",

options(readonly, nostack, preserves_flags)

);

// Jump to the next stage

// The 'ljmp' instruction is required to because it updates the cpu

// segment to the new ones from our GDT.

//

// The segment is the offset in the GDT.

// (KernelCode = 0x10 which is the code segment)

asm!(

"ljmp ${segment}, ${next_stage_address}",

segment = const Sections::KernelCode as u8,

next_stage_address = const SECOND_STAGE_OFFSET,

options(att_syntax)

);

}- Load the global descriptor table

What is Memory Paging?

"The purpose of abstraction is not to be vague, but to create a new semantic level in which one can be absolutely precise." - Edsger W. Dijkstra

In the previous section, we talked about the global descriptor table, and how segments are used to divide memory into logical parts so it is easier to manage. Although this system worked in older 32bit operating systems, it will not be good for our operating system, but way is that?

The Problem in Memory Segmentation

Before we define paging, let's understand what we want for our operating system memory management. For starters, I can think about the following things:

- Basic permissions, i.e Read, Write and Execute

- Kernel mode and User mode.

- Every process has it's own address space.

At first glance, we may see that all of this can be achieved via segmentation, because we can create multiple segments for process code, data etc which creates the process separation, and each segment have the read, write and execute permissions, while also providing the cpu rings for kernel and user mode. So why would we want another system for managing memory?

Let's draw a scenario, we will have three processes, A and B, and we will look at our memory, for convenience, we will manage memory at multiplications of 0x100.

As we can see, every program has it's own memory, additionally, we can define segments likes coda_a, data_a, stack_a etc, so we have organization and permission control. But this picture demonstrates a major problem that there is with segmentation, can you spot it? if not that's fine.

Let's now assume that process B wants more memory, it asks the operating system for another 0x100 bytes. Because the bytes that are contiguous to this process are free, this can be done without any problem and it can just be extended. But, process A is in a problem, and it now needs another buffer of 0x400 bytes, although we do have this amount of free memory, we can't give it to him because it is not contiguous to it. This problem is called fragmentation.

So now you might ask, how can we solve this problem?

I suggest you to think how would you solve this fragmentation problem!

As always, the explanation of the solution that is used today will be bellow

Core Terms

Just before explaining how paging works, let's define some core terms.

-

Physical Memory - This is the actual memory that is used, and it has

absoluteaddresses, and it is the address space that our hardware talks. -

Virtual Memory - This is the address space of our processes, because we want to make an illusion that each process has it's own address space, addresses are absolute only

inside the process, For example, process A address 0x100 represents other region of memory then process B address 0x100. Both of these addresseswilltranslate into a differentphysical addressso we can read and write data to it.

The concept of virtual memory is not new to us. For example, when we discussed before about creating different segments for each process, we created a virtual memory space for each process in terms of accessing data or executing code. These addresses would then

translateto a physical address as we defined in the global descriptor table

Introduction to Paging

Just before explaining paging, I want you to know that there are

four paging modes1 and they all cover the same concepts, and the key difference is the tables layout. We are going to talk about the third type, which is called '4-level paging' and is mostly used on modern computers.

In memory paging we divide our physical memory into units that are called pages, which are blocks of contiguous memory of fixed size (4Kib, 2Mib or 1Gib). Then we create a mapping between the virtual address space, and the physical one. Each process holds a different mapping, hence a different virtual address space.

In the figure bellow we can see this mapping.

for simplification, I changed the block size to 0x100 instead of 0x1000 (4096 bytes) but the principles are still the same.

In this example we can see that even if process B wants more memory, it is not blocked by process A, and can just ask our operating system for more memory and it will map it just after process A thus solving the fragmentation problem. You may also notice that I marked some memory as "Used For Paging" this is because the mapping itself takes some memory and it is not a small portion.

How Addresses Are Translated

When we used segments we knew how to translate virtual address into a physical one. We would go to the appropriate entry in the global descriptor table, and we would take the base address of it, and add it to our virtual address, which would give us the physical address.

In paging the address translation process is a bit more complicated, and it is done with four hierarchical tables.

The official names for those tables are Page-Map Level 4 (PML4), Page Directory Pointer Table (PDT), Page Directory Table (PDT) and Page Table (PT).

In this book I will not use these names because they are complicated, and I am just going to number each level, PML4 being the 4th level, and PT being the 1st level.

Page Table & Page Table Entry

The page table, just like the global descriptor table, is an array of 512 page table entries.

Each entry contains a physical address aligned to 0x1000, that is pointing to a memory regions, and also flags represents configuration and permissions for the memory page mapped by the entry.

On a typical entry, there are 8 flags that are used with an optional 12 flags in total, in our operating system we will configure some of the optional flags, but not all of them.

macro_rules! table_entry_flags {

() => {

// Is this page present?

learnix_macros::flag!(present, 0);

// Is this page writable?

learnix_macros::flag!(writable, 1);

// Can this page be accessed from user mode

learnix_macros::flag!(usr_access, 2);

// Writes go directly to memory

learnix_macros::flag!(write_through_cache, 3);

// Disable cache for this page

learnix_macros::flag!(disable_cache, 4);

// This flag can help identifying if an entry is the

// last one, or it is pointing to another directory

// Is this page points to a custom memory address

// and not a page table?

learnix_macros::flag!(huge_page, 7);

// Page isn't flushed from caches on address space

// switch (PGE bit of CR4 register must be set)

learnix_macros::flag!(global, 8);

// 9-11 are custom custom flags for our use

// mark a table as full

learnix_macros::flag!(full, 9);

// This entry points to a table

learnix_macros::flag!(table, 10);

// This entry is at the top of the hierarchy.

learnix_macros::flag!(root_entry, 11);

// This page is holding data and is not executable

learnix_macros::flag!(not_executable, 63);

};

}The page table entry is just be a u64, and the page table is an array of page entries with 512 entries.

#[derive(Debug, Clone)]

pub struct PageTableEntry(pub u64);

impl PageTableEntry {

table_entry_flags!();

#[inline]

pub(crate) const fn empty() -> Self {

Self(0)

}

}#[repr(C)]

#[repr(align(4096))]

#[derive(Debug)]

pub struct PageTable {

pub entries: [PageTableEntry; PAGE_DIRECTORY_ENTRIES],

}

impl PageTable {

/// Create an empty page table

#[inline]

pub const fn empty() -> Self {

Self {

entries: {

[const { PageTableEntry::empty() }; PAGE_DIRECTORY_ENTRIES]

},

}

}